Have you come across MuseNet, the artificial intelligence music generator, and are you wondering how it works? Well, you have landed at the right place. In this article, we take a look at the MuseNet demo.

MuseNet is a deep neural network built with Sparse Transformer kernels. To model its training data, it examines MIDI files and builds an internal musical vocabulary. MuseNet uses the same general-purpose unsupervised technology as GPT-2, a large-scale transformer model trained to predict the next token in a sequence, whether audio or text.
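To make "predict the next token" concrete, here is a toy Python sketch. It is not MuseNet's code, which is not public: the `<instrument>:v<velocity>:<pitch>` and `wait:<ticks>` token format is our own illustrative assumption, the function names are hypothetical, and a simple bigram counter stands in for the transformer. The overall shape is the same idea, though: turn note events into tokens, give each distinct token an id in a vocabulary, learn which tokens tend to follow which, and extend a prompt one token at a time.

```python
from collections import Counter, defaultdict


def encode(events):
    """Turn note/time events into string tokens (hypothetical format).

    ("wait", ticks)                -> "wait:<ticks>"
    (instrument, velocity, pitch)  -> "<instrument>:v<velocity>:<pitch>"
    """
    tokens = []
    for event in events:
        if event[0] == "wait":
            tokens.append(f"wait:{event[1]}")
        else:
            instrument, velocity, pitch = event
            tokens.append(f"{instrument}:v{velocity}:{pitch}")
    return tokens


def build_vocab(sequences):
    """Give every distinct token an integer id, in order of first appearance."""
    vocab = {}
    for seq in sequences:
        for tok in seq:
            vocab.setdefault(tok, len(vocab))
    return vocab


def train_bigram(sequences):
    """Count which token follows which (a toy stand-in for the transformer)."""
    follows = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            follows[prev][nxt] += 1
    return follows


def continue_sequence(prompt, follows, n_new):
    """Extend a prompt by repeatedly appending the most frequent next token."""
    seq = list(prompt)
    for _ in range(n_new):
        options = follows.get(seq[-1])  # .get avoids adding empty entries
        if not options:
            break  # this token was never followed by anything in training
        seq.append(options.most_common(1)[0][0])
    return seq


# A tiny "training set": one C-major arpeggio on piano.
melody = [("piano", 72, "C4"), ("wait", 12), ("piano", 72, "E4"), ("wait", 12),
          ("piano", 72, "G4"), ("wait", 12), ("piano", 72, "C5")]
tokens = encode(melody)
vocab = build_vocab([tokens])
ids = [vocab[t] for t in tokens]  # the sequence as integer ids
model = train_bigram([tokens])

print(ids)  # → [0, 1, 2, 1, 3, 1, 4]
print(continue_sequence(["piano:v72:C4"], model, 4))
```

Greedy decoding like this quickly gets stuck repeating itself (here it loops between E4 and the wait token). Generative models typically sample from the predicted probabilities instead, and a transformer conditions on the whole preceding context rather than only on the last token.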

In the MuseNet demo: launch MuseNet > click Try MuseNet > in Simple Mode, select a composer and style, and MuseNet creates three variations; or go to Advanced Mode > choose the style and instrument, and MuseNet produces four variations > click Reset or Update to generate new variations.

Read on to learn about the MuseNet demo in detail and how to use it to create music in just a few clicks.

MuseNet Demo


MuseNet can generate four-minute musical compositions with ten different instruments and 15 styles, and it can blend genres ranging from country to Mozart to the Beatles. MuseNet was not explicitly programmed with our understanding of music; instead, it discovered patterns of harmony, rhythm, and style by learning to predict the next token in hundreds of thousands of MIDI files. Here are a few MuseNet samples:

1. The model is given the first six notes of a Chopin Nocturne and asked to continue in a pop style with piano, guitar, bass, and drums. The full band joins in at about the 30-second mark, and the model blends the two styles convincingly.

2. Here, the model is prompted to generate a bluegrass piece with piano, guitar, bass, and drums.

3. This sample starts from a Rachmaninoff piano prompt. By starting with a prompt such as a Rachmaninoff piano opening, we can steer the model to produce samples in a chosen style.

4. MuseNet can also create simple melodic structures, as in this sample, which imitates Mozart starting from the first six notes.

5. MuseNet is a 72-layer network with 24 attention heads, trained with full attention over a context of 4,096 tokens. This long context helps it remember a piece’s long-term structure, as in the following Chopin imitation.
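The 4,096-token figure is the model's context window: when predicting the next token, the network can draw on at most the previous 4,096 tokens. The pure-Python sketch below illustrates that idea with a single causal attention head. It is a textbook simplification, not MuseNet's implementation (no learned projections, no multiple heads or layers), and the function names are our own. `attention_pairs` shows why long contexts are expensive: a causal layer scores every position against every earlier one, so the work grows roughly with the square of the context length.

```python
import math

N_CTX = 4096  # context length reported for MuseNet, used here as a default


def clip_to_context(tokens, n_ctx=N_CTX):
    """Keep only the most recent n_ctx tokens; older ones are invisible to the model."""
    return tokens[-n_ctx:]


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v are lists of equal-length float vectors, one per position.
    Position i attends to every position 0..i and never to later ones.
    """
    d = len(q[0])
    out = []
    for i in range(len(q)):
        # Scores against the current and all earlier positions only.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        # Output is the softmax-weighted average of the visible value vectors.
        out.append([sum(w / total * v[j][c] for j, w in enumerate(weights))
                    for c in range(len(v[0]))])
    return out


def attention_pairs(n):
    """Number of (query, key) pairs a causal layer scores for n positions."""
    return n * (n + 1) // 2


print(attention_pairs(N_CTX))  # → 8390656 pairs per head, per layer
```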

How To Use The MuseNet Demo?

According to the experts working on the project, the system can attend to long stretches of music and capture the overall context of a song’s melodies, rather than just how notes flow together in a short passage. Instead of turning text prompts into images, as image generators do, MuseNet takes MIDI (Musical Instrument Digital Interface) files as prompts and uses artificial intelligence to compose new sections. The rendered output is in MIDI format. Note that the project is not open source, so you will not find it on GitHub. Now, we will take you through the MuseNet demo available on OpenAI’s official MuseNet page. It has two modes, simple mode and advanced mode, which you can use to create a song!

Step 1: Open MuseNet.

Step 2: Under “Try MuseNet,” “Simple Mode” is shown by default. Here you can listen to a large set of pre-generated, random, uncurated samples.

Step 3: Start the creation process by selecting a composer or style.

Step 4: Then, MuseNet generates three variations.

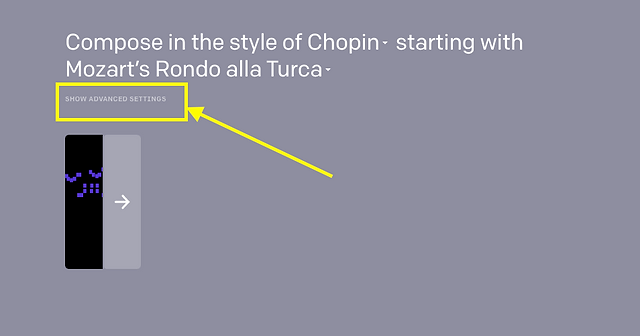

Step 5: Next, you can interact with the model directly in advanced mode (“Advanced Settings”) to create entirely new pieces.

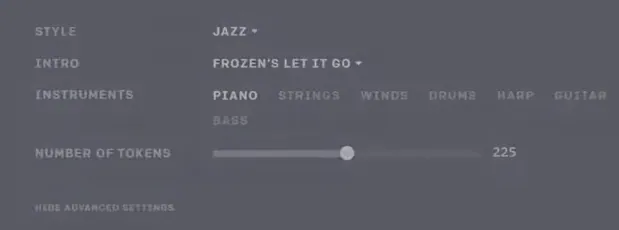

Step 6: In advanced mode, MuseNet lets you choose the style, intro, instrument, and number of tokens. The number of tokens sets the size of the generated clip.

Step 7: MuseNet then generates music in advanced mode within a few seconds.

Step 8: You can also click on the OpenAI icon to generate more music. MuseNet can generate up to four variations.

Step 9: If you don’t like these, click “Reset” or “Update” to generate new variations.

With these steps, you can easily create music using the MuseNet demo.

Wrapping Up

We have come to the end of the post. AI music generators are built on deep learning algorithms trained through extensive data analysis: you gather a large number of music tracks, process them, and then feed this data to the AI. We hope this article has given you a clear explanation of the MuseNet demo. For more informative and interesting articles, check out our website at Deasilex.

Frequently Asked Questions

Q1. How Long Does Jukebox AI Take?

Ans. Each 20-second music sample is estimated to take about 10 minutes to generate, but be prepared for it to take up to 20 minutes. Once the generation code has finished running, you can access the music you created in your Google Drive!

Q2. Will AI Replace Musicians?

Ans. Although AI won’t replace musicians in relaying sensory experiences through music, understanding and utilizing it may become increasingly important for musicians and everyone else involved in the present music industry.

Q3. Is MuseNet A Creative AI?

Ans. Like any AI system that produces music, art, or prose, MuseNet isn’t as imaginative or innovative as a human musician. It works by identifying patterns in existing works and then producing statistical variations on them.

Q4. What Is A Music API?

Ans. A music database API is typically a web service or REST API that retrieves and returns music information, such as song tracks, lyrics, and artist or album details. Well-known examples include Deezer, iTunes, and musiXmatch.
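As an illustration of how such an API is used, here is a minimal Python sketch against the iTunes Search API, one of the services named above. It only builds the request URL and parses a response body, so it runs offline. The sample payload is a made-up example in the shape of a search result, and `build_search_url` and `parse_tracks` are hypothetical helper names. To query the live service, you would fetch the URL (for example with `urllib.request.urlopen`) and pass the response body to `parse_tracks`.

```python
import json
from urllib.parse import urlencode

SEARCH_URL = "https://itunes.apple.com/search"


def build_search_url(term, limit=5):
    """Build a song-search request URL for the iTunes Search API."""
    params = {"term": term, "media": "music", "entity": "song", "limit": limit}
    return f"{SEARCH_URL}?{urlencode(params)}"


def parse_tracks(payload):
    """Extract (track, artist, album) tuples from a JSON response body."""
    data = json.loads(payload)
    return [
        (r.get("trackName"), r.get("artistName"), r.get("collectionName"))
        for r in data.get("results", [])
    ]


# Made-up response body in the same shape as a real search result.
sample = json.dumps({
    "resultCount": 1,
    "results": [{"trackName": "Here Comes the Sun", "artistName": "The Beatles",
                 "collectionName": "Abbey Road"}],
})

print(build_search_url("the beatles"))
# → https://itunes.apple.com/search?term=the+beatles&media=music&entity=song&limit=5
print(parse_tracks(sample))
# → [('Here Comes the Sun', 'The Beatles', 'Abbey Road')]
```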

Q5. How Is AI Used In Music?

Ans. AI creates constantly changing soundscapes for focus and relaxation, powers streaming services’ recommendation systems, facilitates audio mixing and mastering, and creates music with no copyright problems.